Handling Big Data with HBase Part 6: Wrap-up

Posted on December 19, 2013 by Scott Leberknight

This is the sixth and final post in an introduction to Apache HBase. In the fifth part, we learned the basics of schema design in HBase and several techniques you can use to make scanning and filtering data more efficient. We also saw two different basic design schemes ("wide" and "tall") for storing information about the same entity, and briefly touched upon more advanced topics like adding full-text search and secondary indexes. In this part, we'll wrap up by summarizing the main points and then listing the (many) things we didn't cover in this introductory series.

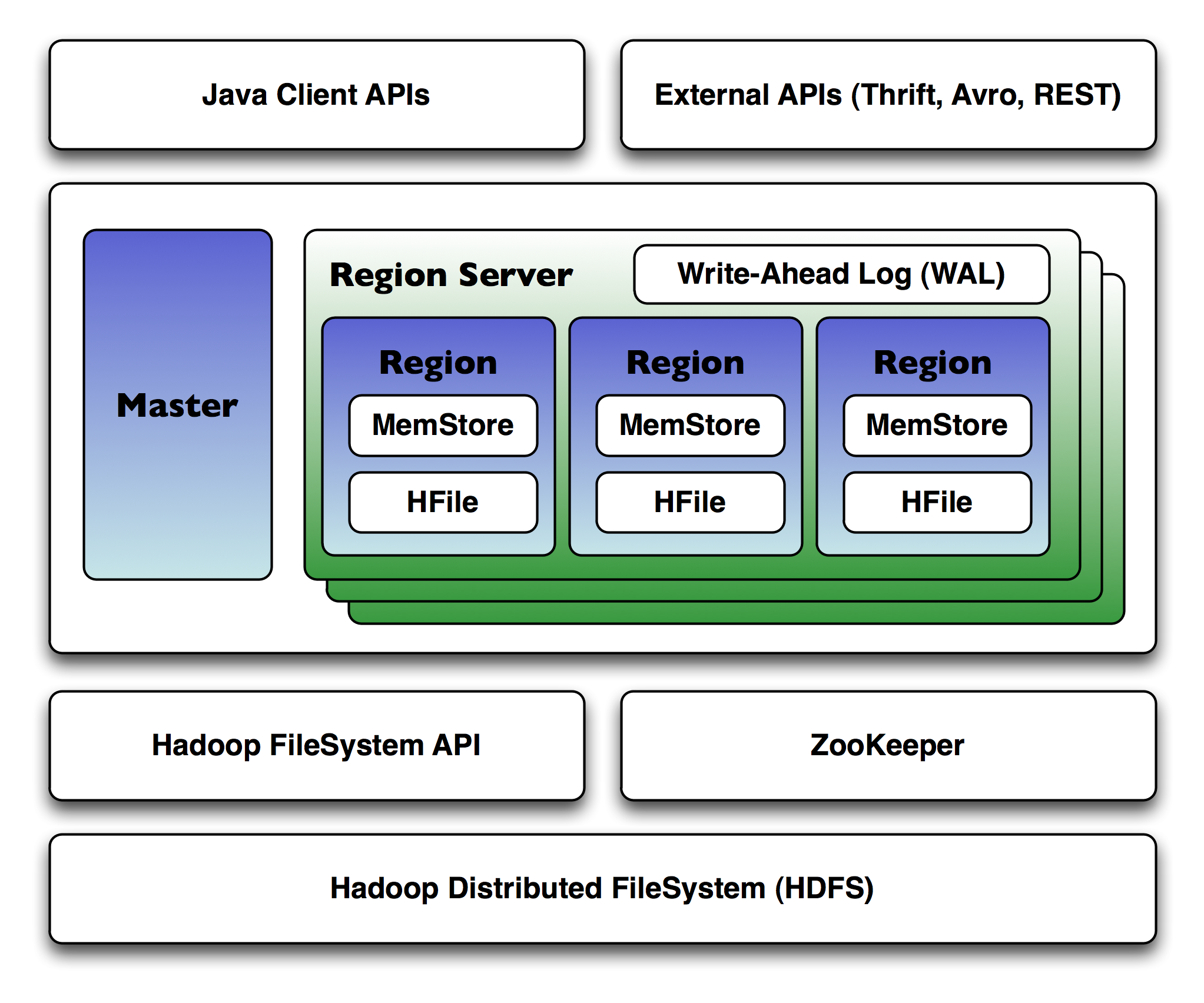

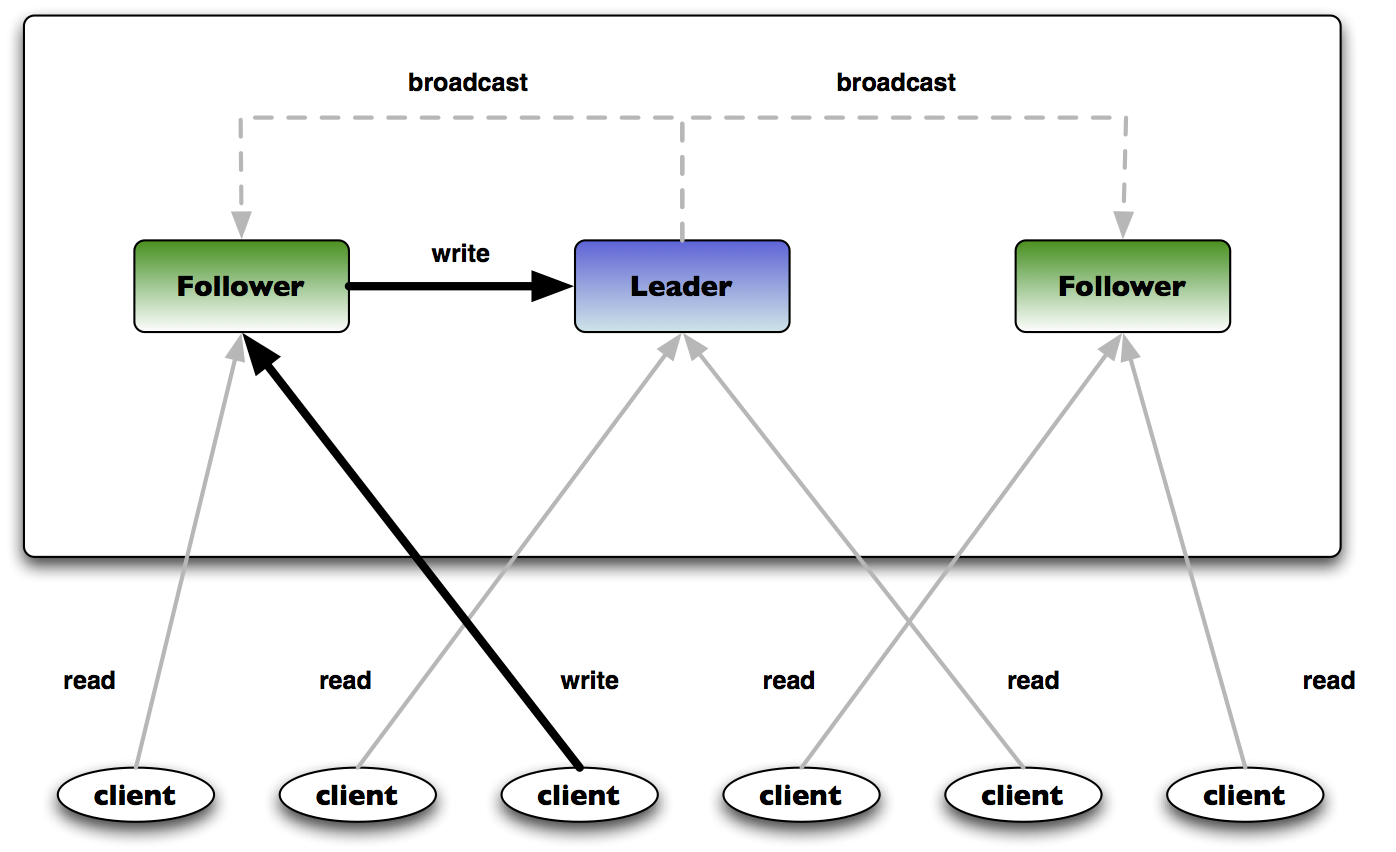

HBase is a distributed database providing inherent scalability, performance, and fault-tolerance across potentially massive clusters of commodity servers. It provides the means to store and efficiently scan large swaths of data. We've looked at the HBase shell for basic interaction, covered the high-level HBase architecture and looked at using the Java API to create, get, scan, and delete data. We also considered how to design tables and row keys for efficient data access.

One thing you certainly noticed when working with the HBase Java API is that it is much lower level than other data APIs you might be used to working with, for example JDBC or JPA. You get the basics of CRUD plus scanning data, and that's about it. In addition, you work directly with byte arrays, which is about as low-level as it gets when you're trying to retrieve information from a datastore.
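To make the byte-array point concrete, here is a minimal sketch of the kind of conversion you end up doing around every read and write. It uses only the JDK; the class and method names (`ByteCodec`, `asString`, `asLong`) are made up for this illustration. In a real client you would normally reach for HBase's own `Bytes` utility class rather than rolling your own.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical helper (not part of HBase): a plain-JDK stand-in for the
// value <-> byte[] conversions an HBase client performs by hand.
final class ByteCodec {
    // Encode a String as UTF-8 bytes.
    static byte[] toBytes(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Encode a long as 8 big-endian bytes (ByteBuffer's default byte order).
    static byte[] toBytes(long value) {
        return ByteBuffer.allocate(Long.BYTES).putLong(value).array();
    }

    // Decode UTF-8 bytes back into a String.
    static String asString(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    // Decode 8 big-endian bytes back into a long.
    static long asLong(byte[] bytes) {
        return ByteBuffer.wrap(bytes).getLong();
    }
}
```

The datastore never knows whether a cell holds a string or a number; the caller has to remember which decoder to apply, which is exactly the bookkeeping that higher-level APIs like JDBC hide from you.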

If you are considering whether to use HBase, you should really think hard about how large the data is, i.e. does your app need to be able to accommodate ever-growing volumes of data? If it does, then you need to think hard about what that data looks like and what the most likely data access patterns will be, as these will drive your schema design. For example, if you are designing a schema for a weather collection project, you will want to consider using a "tall" schema design such that the sensor readings for each sensor are split across rows, as opposed to a "wide" design in which you keep adding columns to a column family in a single row. Unlike relational models, in which you work hard to normalize data and then use SQL as a flexible way to join the data in various ways, with HBase you need to think much more up-front about the data access patterns, because retrieval by row key and table scans are the only two ways to access data. In other words, there is no joining across multiple HBase tables and projecting out the columns you need. When you retrieve data, you want to ask HBase for only the exact data you need.
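As a rough illustration of the "tall" weather design, the sketch below models a table as a sorted map, since HBase keeps rows ordered by row key. Everything here is an assumption made for the example: the `TallSchemaSketch` class, the `sensorId|timestamp` key layout, and the use of strings instead of raw bytes. It is not HBase code, just a way to see why key design decides what you can scan efficiently.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.SortedMap;
import java.util.TreeMap;

// Hypothetical in-memory model of a "tall" table: one row per sensor reading.
final class TallSchemaSketch {
    // Sorted map standing in for an HBase table, whose rows are ordered by key.
    private final TreeMap<String, Double> rows = new TreeMap<>();

    // Composite row key: sensor id, then a zero-padded timestamp so that
    // lexicographic order matches chronological order within a sensor.
    static String rowKey(String sensorId, long epochSeconds) {
        return String.format(Locale.ROOT, "%s|%010d", sensorId, epochSeconds);
    }

    void put(String sensorId, long epochSeconds, double reading) {
        rows.put(rowKey(sensorId, epochSeconds), reading);
    }

    // "Scan" one sensor's readings for the time range [from, to):
    // a start key (inclusive) and a stop key (exclusive).
    List<Double> scan(String sensorId, long from, long to) {
        SortedMap<String, Double> range =
                rows.subMap(rowKey(sensorId, from), rowKey(sensorId, to));
        return new ArrayList<>(range.values());
    }
}
```

Because the sensor id leads the key, all readings for one sensor sit next to each other and a time-range query touches only those rows. A question like "all sensors at a given time" would not be served well by this key, which is the kind of trade-off you have to settle up front.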

Things We Didn't Cover

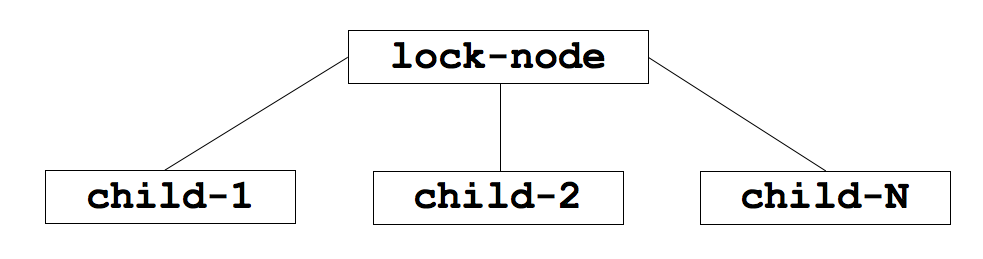

Now let's discuss a few things we didn't cover. First, coprocessors were a major addition to HBase in version 0.92, and were inspired by Google adding coprocessors to its Bigtable data store. You can, at a high level, think of coprocessors like triggers or stored procedures in relational databases. Basically, you can have either trigger-like functionality via observers, or stored-procedure functionality via RPC endpoints. This allows many new things to be accomplished in an elegant fashion, for example maintaining secondary indexes by observing changes to data.
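To give a feel for the observer idea without getting into the real coprocessor API, here is a toy model in plain Java. To be clear, `ObservedTable` and `onPostPut` are invented for this sketch and are not HBase classes or methods; the point is only the shape of the pattern, where a callback fires after each write and can keep a secondary index up to date.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;

// Toy in-memory "table" with trigger-like hooks; NOT the HBase coprocessor API.
final class ObservedTable {
    private final Map<String, String> rows = new HashMap<>();
    private final List<BiConsumer<String, String>> postPutHooks = new ArrayList<>();

    // Register a callback that runs after every put (the "observer").
    void onPostPut(BiConsumer<String, String> hook) {
        postPutHooks.add(hook);
    }

    // Store the value, then notify each observer of the change.
    void put(String rowKey, String value) {
        rows.put(rowKey, value);
        for (BiConsumer<String, String> hook : postPutHooks) {
            hook.accept(rowKey, value);
        }
    }

    String get(String rowKey) {
        return rows.get(rowKey);
    }
}
```

With this in place, a secondary index from value back to row key is one line of wiring: `users.onPostPut((rowKey, email) -> emailIndex.put(email, rowKey));` where `emailIndex` is an ordinary `Map<String, String>`. The sketch ignores updates and deletes, which a real index would also have to handle.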

We showed basic API usage, but more advanced usage is possible. For example, you can batch data and apply much more advanced filtering than the simple paging filter we showed. There is also the concept of counters, which allow you to do atomic increments of numbers without requiring the client to perform explicit row locking. And if you're not really into Java, there are external APIs available via Thrift and REST gateways. There is even a C/C++ client available, and there are DSLs for Groovy, Jython, and Scala. These are all discussed on the HBase wiki.

Cluster setup and configuration were not covered at all, nor was performance tuning. Obviously these are hugely important topics, and the references below are good starting places. With HBase you need to worry not only about tuning HBase configuration, but also about tuning Hadoop (or more specifically, the HDFS file system). For these topics, definitely start with the HBase Reference Guide and also check out HBase: The Definitive Guide by Lars George.

We also didn't cover how to use Map/Reduce with HBase. Essentially, you can use Hadoop's Map/Reduce framework to access HBase tables and perform tasks like aggregation in a Map/Reduce style.

Last, there is security in HBase (which I suppose should be expected to come last for a developer, right?). There are two types of security I'm referring to here. The first is access to HBase itself in order to create, read, update, and delete data, e.g. by requiring Kerberos authentication to connect to HBase. The second is ACL-based access restrictions. As of this writing, HBase lets you restrict access via ACLs at the table and column family level. However, HBase Cell Security describes how cell-level security features similar to those in Apache Accumulo are being added to HBase; according to the corresponding issue, they are scheduled for release in version 0.98 (the current version as of this writing is 0.96).

Goodbye!

With this background, you can now consider whether HBase makes sense on future projects with Big Data and high scalability requirements. I hope you found this series of posts useful as an introduction to HBase.

References

- HBase web site, http://hbase.apache.org/

- HBase wiki, http://wiki.apache.org/hadoop/Hbase

- HBase Reference Guide, http://hbase.apache.org/book/book.html

- HBase: The Definitive Guide, http://bit.ly/hbase-definitive-guide

- Google Bigtable Paper, http://labs.google.com/papers/bigtable.html

- Hadoop web site, http://hadoop.apache.org/

- Hadoop: The Definitive Guide, http://bit.ly/hadoop-definitive-guide

- Fallacies of Distributed Computing, http://en.wikipedia.org/wiki/Fallacies_of_Distributed_Computing

- HBase lightning talk slides, http://www.slideshare.net/scottleber/hbase-lightningtalk

- Sample code, https://github.com/sleberknight/basic-hbase-examples