Distributed Coordination With ZooKeeper Part 4: Architecture from 30,000 Feet

Posted on July 07, 2013 by Scott Leberknight

This is the fourth in a series of blogs that introduce Apache ZooKeeper. In the third blog, you implemented a group membership example using the ZooKeeper Java API. In this blog, we'll get an overview of ZooKeeper's architecture.

Now that we've test driven Apache ZooKeeper in the shell and in Java code, let's take a bird's-eye view of the ZooKeeper architecture and expand on the core concepts discussed earlier. As previously mentioned, ZooKeeper is essentially a distributed, hierarchical filesystem composed of znodes, which can be either persistent or ephemeral. Persistent znodes can have children, and they persist after the client session that created them disconnects or expires. In contrast, ephemeral znodes cannot have children, and they are automatically destroyed as soon as the session in which they were created ends, whether it is closed or expires. Both persistent and ephemeral znodes can have associated data; however, the data must be less than 1MB per znode by default. All znodes can optionally be sequential, for which ZooKeeper maintains a monotonically increasing number that is automatically appended to the znode name upon creation. Each sequence number is guaranteed to be unique within its parent znode. Finally, all znode operations (reads and writes) are atomic; they either succeed or fail, and there is never a partial application of an operation. For example, if a client tries to set data on a znode, the operation will either set the data in its entirety, or no data will be changed at all.
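To make the sequential naming concrete, here is a minimal sketch of how the suffix is formed. It is a toy in-memory model, not the ZooKeeper API: the class `SequentialNames` and its `create` method are hypothetical, and it simplifies things by starting each parent's counter at zero. The one detail taken from ZooKeeper itself is the suffix format, a ten-digit, zero-padded counter.

```java
import java.util.HashMap;
import java.util.Map;

// Toy, in-memory model of sequential znode naming; not the ZooKeeper API.
class SequentialNames {
    // One counter per parent path, since the sequence is scoped to the parent znode.
    private final Map<String, Integer> counters = new HashMap<>();

    // Returns the path a sequential create would produce for the requested path.
    String create(String requestedPath) {
        int slash = requestedPath.lastIndexOf('/');
        String parent = slash <= 0 ? "/" : requestedPath.substring(0, slash);
        int next = counters.merge(parent, 1, Integer::sum) - 1; // first child gets 0
        // Append the counter as a ten-digit, zero-padded number.
        return requestedPath + String.format("%010d", next);
    }

    public static void main(String[] args) {
        SequentialNames names = new SequentialNames();
        System.out.println(names.create("/locks/lock-")); // /locks/lock-0000000000
        System.out.println(names.create("/locks/lock-")); // /locks/lock-0000000001
        System.out.println(names.create("/queue/item-")); // /queue/item-0000000000
    }
}
```

Because the names sort lexicographically in creation order, clients can use them to agree on an ordering without talking to each other.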

A key element of ZooKeeper's architecture is the ability to set watches on read operations such as exists, getChildren, and getData. Write operations (create, delete, and setData) on znodes trigger any watches previously set on those znodes, and watchers are notified via a WatchedEvent. How clients respond to events is entirely up to them, but setting watches and receiving notifications at some later point in time results in an event-driven, decoupled architecture. Suppose client A sets a watch on a znode. At some point in the future, when client B performs a write operation on the znode client A is watching, a WatchedEvent is generated and client A is called back via the process method of its Watcher. Clients A and B are completely independent and need not know anything about each other, so long as they each know their own responsibilities in relation to specific znodes.

The important thing to remember about watches is that they are one-time notifications about changes to a znode. If a client receives a WatchedEvent notification, it must register a new Watcher if it wants to be notified about future updates. Between receipt of the notification and re-registration, other clients may perform write operations on the znode, and the client will receive no notification for them. In other words, in a high write volume environment a client can miss intermediate updates during the time it takes to process an event and register a new watch. Clients should assume updates can be missed, and should not rely on having a complete history of every single event that occurs to a given znode.
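The one-time nature of watches can be sketched with a small simulation. This is a toy, single-threaded model rather than the ZooKeeper client: `ToyZnode`, `Listener`, and the method names are all hypothetical, and the "version" is just a counter bumped on every write.

```java
import java.util.ArrayList;
import java.util.List;

// Toy, single-threaded model of one-time watches; not the ZooKeeper API.
class OneShotWatchDemo {
    interface Listener { void changed(int version); }

    static class ToyZnode {
        private int version = 0;
        private final List<Listener> watchers = new ArrayList<>();

        // Read the current version and leave a one-time watch.
        int getData(Listener watcher) {
            watchers.add(watcher);
            return version;
        }

        // Write: bump the version, then fire and clear all pending watches.
        void setData() {
            version++;
            List<Listener> toNotify = new ArrayList<>(watchers);
            watchers.clear();
            for (Listener w : toNotify) w.changed(version);
        }
    }

    // Client A sets one watch, then three writes happen before A re-registers.
    static List<Integer> notifiedVersionsAfterThreeWrites(ToyZnode znode) {
        List<Integer> notified = new ArrayList<>();
        znode.getData(v -> notified.add(v)); // client A sets a watch
        znode.setData(); // client B writes: the watch fires for version 1
        znode.setData(); // no watch is registered, so nobody is notified
        znode.setData(); // same here
        return notified;
    }

    public static void main(String[] args) {
        ToyZnode znode = new ToyZnode();
        List<Integer> notified = notifiedVersionsAfterThreeWrites(znode);
        int current = znode.getData(v -> { }); // re-register and read the latest state
        // prints: notified for versions [1], current version 3
        System.out.println("notified for versions " + notified + ", current version " + current);
    }
}
```

Client A hears about only the first of three writes, but the read it performs while re-registering still returns the latest state. That is why watch handlers are usually written to re-read the znode rather than to count events.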

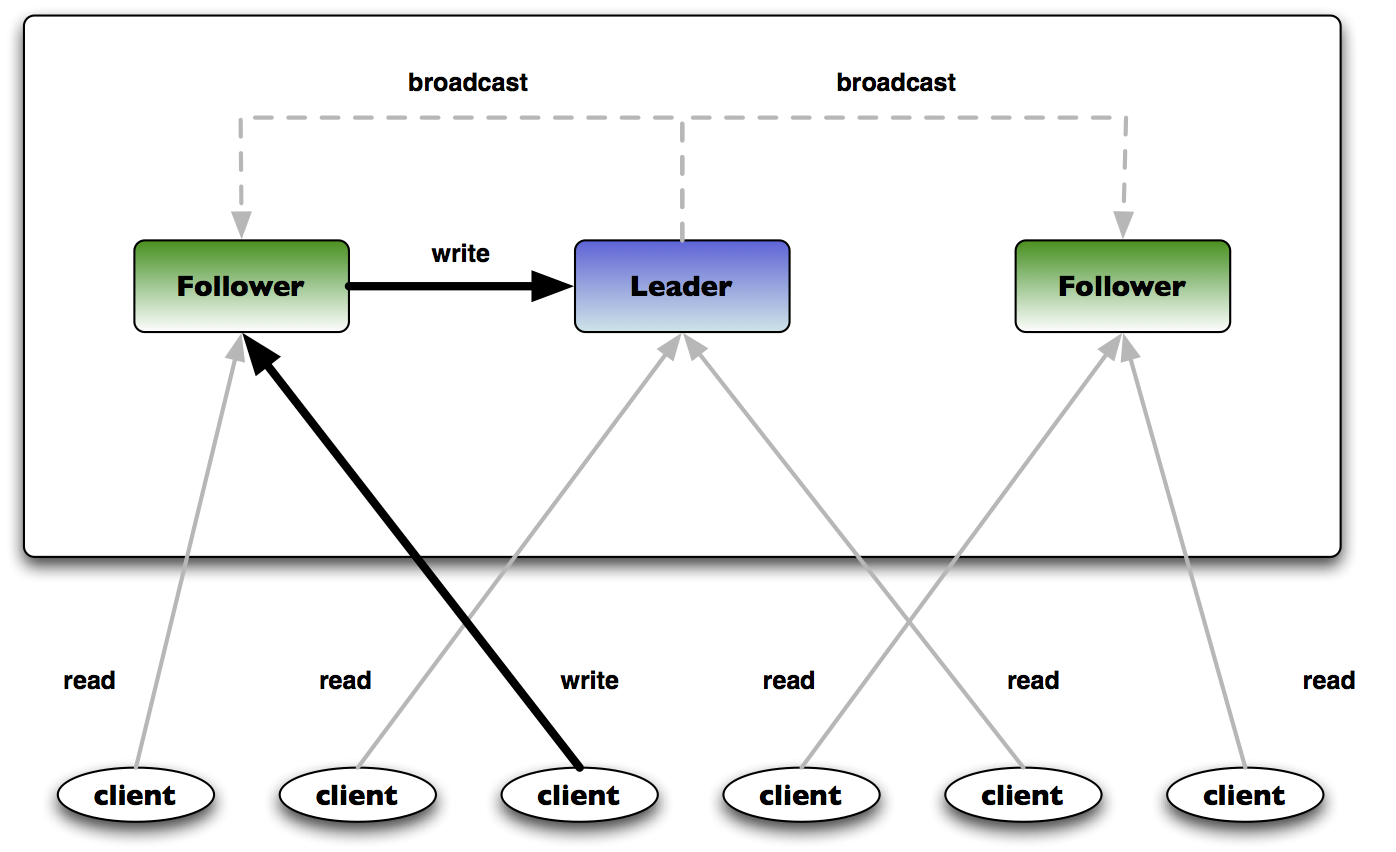

ZooKeeper implements the hierarchical filesystem via an "ensemble" of servers. Figure 1 shows a three-server ensemble with multiple clients reading and one client writing. The basic idea is that the filesystem state is replicated on each server in the ensemble, both on disk and in memory.

Figure 1 - ZooKeeper Ensemble

In Figure 1 you can see that one of the servers in the ensemble acts as the leader, while the rest are followers. When an ensemble is first started, a leader election is held. During leader election, a leader is elected, and the process is complete once a simple majority of followers have synchronized their state with the leader. After leader election is complete, all write requests are routed through the leader, and changes are broadcast to all followers; this is termed atomic broadcast. Once a majority of followers have persisted the change (to disk and memory), the leader commits the change and notifies the client of a successful update. Because only a majority of followers is required for a successful update, followers can lag the leader, which means ZooKeeper is an eventually consistent system. Thus, different clients can read information about a given znode and receive different answers. Every write is assigned a globally unique, sequentially ordered identifier called a zxid, or ZooKeeper transaction id. This guarantees a global order to all updates in a ZooKeeper ensemble. In addition, because all writes go through the leader, write throughput does not scale as more nodes are added.
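As a small illustration of why zxids give a total order, the ZooKeeper internals documentation describes the zxid as a 64-bit number with the leader's epoch in the high 32 bits and a per-epoch counter in the low 32 bits. The sketch below assumes that layout; the `Zxid` class and its helper names are hypothetical, not part of the ZooKeeper API.

```java
// Helpers for the zxid layout assumed here: epoch in the high 32 bits, counter in the low 32 bits.
class Zxid {
    static long make(long epoch, long counter) {
        return (epoch << 32) | (counter & 0xFFFFFFFFL);
    }

    static long epoch(long zxid) {
        return zxid >>> 32;
    }

    static long counter(long zxid) {
        return zxid & 0xFFFFFFFFL;
    }

    public static void main(String[] args) {
        long lastOfEpoch1 = make(1, 500);
        long firstOfEpoch2 = make(2, 0); // a new leader starts a new epoch
        System.out.println(Long.toHexString(lastOfEpoch1)); // 1000001f4
        System.out.println(lastOfEpoch1 < firstOfEpoch2);   // true
    }
}
```

Comparing two zxids as plain numbers is enough to decide which write happened first, even across a change of leader, because a later epoch always yields a larger value.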

This leader/follower architecture is not a master/slave setup, however, since the leader is not a single point of failure. If a leader dies, then a new leader election takes place and a new leader is elected (this is typically very fast, although the ensemble cannot process writes until the election completes). In addition, because leader election and writes both require a simple majority of servers, ZooKeeper ensembles should contain an odd number of machines. In a five-node ensemble any two machines can go down and ZooKeeper remains available, whereas a six-node ensemble can also handle only two machines going down: if three nodes fail, the remaining three are not a majority of the original six.
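The majority arithmetic behind the odd-number recommendation is easy to check. The sketch below is plain Java with hypothetical names (`Quorum`, `majority`, `tolerated`) and does not touch ZooKeeper itself.

```java
// Simple-majority arithmetic for an ensemble of n servers.
class Quorum {
    // Smallest number of servers that forms a strict majority of n.
    static int majority(int n) {
        return n / 2 + 1;
    }

    // How many servers can fail while a majority of the original n is still running.
    static int tolerated(int n) {
        return n - majority(n);
    }

    public static void main(String[] args) {
        for (int n = 3; n <= 7; n++) {
            System.out.println(n + " servers: majority " + majority(n)
                    + ", tolerates " + tolerated(n) + " failures");
        }
    }
}
```

Going from five servers to six raises the majority from three to four but leaves the number of tolerated failures at two, so the extra machine adds write cost without adding fault tolerance.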

All client read requests are served directly from the memory of the server they are connected to, which makes reads very fast. In addition, clients have no knowledge about the server they are connected to and do not know if they are connected to a leader or follower. Because reads are from the in-memory representation of the filesystem, read throughput increases as servers are added to an ensemble. But recall that write throughput is limited by the leader, so you cannot simply add more and more ZooKeepers forever and expect performance to increase.

Data Consistency

With ZooKeeper's leader/follower architecture in mind, let's consider what guarantees it makes regarding data consistency.

Sequential Updates

ZooKeeper guarantees that updates are made to the filesystem in the order they are received from clients. Since all writes route through the leader, the global order is simply the order in which the leader receives write requests.

Atomicity

All updates either succeed or fail, just like transactions in ACID-compliant relational databases. As of version 3.4.0, ZooKeeper supports transactions as a thin wrapper around the multi operation, which performs a list of operations (instances of the Op class) such that either all operations succeed or none do. So if you need to ensure that multiple znodes are updated together, for example if two znodes are part of a graph, you can use multi or the transaction wrapper around multi.
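The all-or-nothing behavior of multi can be sketched without a running ensemble. The following is a toy in-memory model, not the ZooKeeper API: `ToyMulti` and `SetData` are hypothetical stand-ins (in the real client the list would hold Op instances, as described above), and the only failure modeled is setting data on a znode that does not exist.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy, in-memory model of all-or-nothing updates; not the ZooKeeper API.
class ToyMulti {
    // A hypothetical operation: set path to data, failing if the znode does not exist.
    static class SetData {
        final String path;
        final String data;

        SetData(String path, String data) {
            this.path = path;
            this.data = data;
        }
    }

    private Map<String, String> znodes = new HashMap<>();

    void create(String path, String data) {
        znodes.put(path, data);
    }

    String get(String path) {
        return znodes.get(path);
    }

    // Applies every operation to a scratch copy and publishes the copy only if all succeed.
    boolean multi(List<SetData> ops) {
        Map<String, String> scratch = new HashMap<>(znodes);
        for (SetData op : ops) {
            if (!scratch.containsKey(op.path)) {
                return false; // one failure aborts the whole batch; znodes is untouched
            }
            scratch.put(op.path, op.data);
        }
        znodes = scratch;
        return true;
    }
}
```

For example, after creating `/graph/a` and `/graph/b`, a batch that updates `/graph/a` and a missing `/graph/c` returns false and leaves `/graph/a` unchanged, while a batch that updates both existing znodes changes them together.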

Consistent client view

Consistent client view means that a client will see the same view of the system, regardless of which server it is connected to. The official ZooKeeper documentation calls this "single system image". So, if a client fails over to a different server during a session, it will never see an older view of the system than it has previously seen. A server will not accept a connection from a client until it has caught up with the state of the server to which the client was previously connected.
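One way to picture the failover rule is as a comparison of transaction ids. The sketch below is an assumption-laden toy, not ZooKeeper's server code: it supposes the client remembers the zxid of the last state it saw, and `FailoverCheck` and `canAccept` are hypothetical names.

```java
// Toy check modeled on the failover rule described above; not ZooKeeper's actual server code.
class FailoverCheck {
    // A server may take over a session only if it has seen at least as much as the client has.
    static boolean canAccept(long serverLastZxid, long clientLastSeenZxid) {
        return serverLastZxid >= clientLastSeenZxid;
    }

    public static void main(String[] args) {
        long clientLastSeen = 42L;
        System.out.println(canAccept(40L, clientLastSeen)); // false: this server is behind the client
        System.out.println(canAccept(42L, clientLastSeen)); // true: this server has caught up
    }
}
```

A lagging server that fails this check would show the client an older view than it has already seen, which is exactly what the guarantee rules out.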

Durability

If an update succeeds, ZooKeeper guarantees it has been persisted and will survive server failures, even if all ZooKeeper ensemble nodes were forcefully killed at the same time! (Admittedly this would be an extreme situation, but the update would survive such an apocalypse.)

Eventual consistency

Because followers may lag the leader, ZooKeeper is an eventually consistent system. But ZooKeeper limits the amount of time a follower can lag the leader, and a follower will take itself offline if it falls too far behind. Clients can force a server to catch up with the leader by calling the asynchronous sync command. Despite the fact that sync is asynchronous, a ZooKeeper server will not process any operations until it has caught up to the leader.

Conclusion to Part 4

In this fourth blog on ZooKeeper you saw a bird's eye view of ZooKeeper's architecture, and learned about its data consistency guarantees. You also learned that ZooKeeper is an eventually consistent system.

In the next blog, we'll dive back into some code and use what we've learned so far to build a distributed lock.

References

- Source code for these blogs, https://github.com/sleberknight/zookeeper-samples

- Presentation on ZooKeeper, http://www.slideshare.net/scottleber/apache-zookeeper

- ZooKeeper web site, http://zookeeper.apache.org/